Matt East and Jamie Wood

At the end of November, we reviewed the responses received so far to the Active Online Reading project student survey. This was for a poster we were preparing for a UNESCO Inclusive Policy Lab event on Education and Digital Skills. In an earlier blog post, we said that we’d provide some further preliminary analysis of the data that we presented in the poster.

In this post, we outline what we think the responses so far are indicating. We should note that this is by no means the entire question set, but the number of responses (over one hundred, depending on the question) points to some interesting trends. In what follows, we present the responses to five questions, accompanied by some brief preliminary analysis.

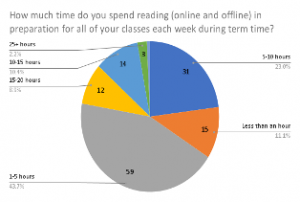

When asked how much time they spent reading each week in preparation for all of their classes, nearly 55% of students said that they read for between zero and five hours, with another 23% reporting between five and ten hours (so 78% of our student respondents read for under ten hours per week for their studies). Of course, the reading load varies considerably by discipline, but this is striking, especially as staff may expect students to do much more reading each week and many of our respondents are on History degrees (generally regarded as more reading-intensive). Given that our sample includes a number of postgraduate students, whom one might expect to read more than their undergraduate peers, a later stage of our analysis will break down the data by level of study.

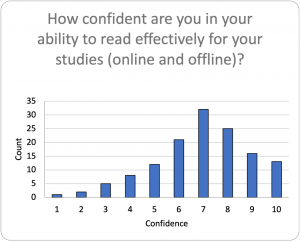

We asked another question about students’ confidence in their ability to read (both online and offline) for their studies, with students awarding themselves an average confidence score of 6.93 out of 10 and 63.7% rating themselves at 7/10 or above. Such relatively high levels of confidence may help to explain why the reported time spent on reading for study is low (at least from a staff perspective). If students are confident in their reading practices, then they may perceive themselves to be efficient readers and/or think that there is no need to change their practices.

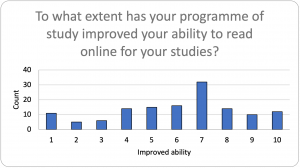

Given students’ relative confidence in their overall ability to read (online and offline) for their studies, the lower average rating (6.01/10) for how far their courses had developed their ability to read online is noteworthy. Perhaps this is related to the fact that pedagogies and resources for supporting students in reading online are underdeveloped in comparison to those for ‘offline’ reading. (Someone should do a project about that…!)

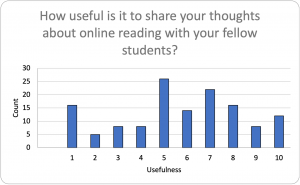

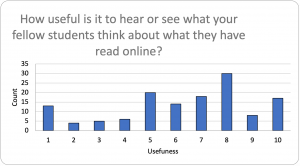

Finally, based on some research that we have done (East, Willard and Wood, 2022) into student experiences and perceptions of social annotation (i.e. collaboratively reading and commenting on readings), we wanted to know how students felt about sharing their thoughts with each other online. The responses to these two questions are summarised in the two graphs above.

As you can see in the preceding two figures, we asked students how useful they thought it was to share their thoughts about online readings with their fellow students (the first graph) and to see what others had written about their readings (the second graph). It is interesting that students were markedly more enthusiastic about seeing what others had written (average rating: 6.3/10) than they were about sharing their own thoughts (average: 5.7/10). This aligns closely with the findings of our earlier small-scale qualitative research project, mentioned above, where students saw real value in having access to the thoughts of their peers, but were less keen to share their own views on what they had read. This isn’t particularly surprising, given students’ known reluctance to engage in collaborative working more generally (White et al., 2014), but it is interesting to see such findings confirmed in relation to online collaborative reading.

We hope that you find this short summary of some of our interim findings interesting. Since we did this analysis, we have received further responses to the survey and will be sharing the full results in due course via publications, blogs and at events. We’d be interested in knowing your thoughts about our first attempt to make sense of the data.

Reference: M. East, H. Willard and J. Wood (2022). Collaborative Annotation to Support Students’ Online Reading Skills. In S. Hrastinski, ed., Designing Courses with Digital Technologies: Insights and Examples from History Education (New York: Routledge), 66-71.

If you’ve not done so already, please complete our staff and student surveys here. They will remain open until the end of January. When we return in January, we will be undertaking further analysis of the staff responses and of the qualitative feedback from the student survey.